Introduction

Reservoir is first and foremost a memory system for interactions with large language models, designed to build a Retrieval-Augmented Generation (RAG) database of useful context from language model interactions over time. It maintains conversation history in a Neo4j graph database and automatically injects relevant context into requests based on semantic similarity and recency. Reservoir can also act as an optional stateful proxy server for OpenAI-compatible Chat Completions APIs.

Problem Statement

By default, Language Model interactions are stateless. Each request must include the complete conversation history for the model to maintain context. This creates several technical challenges:

- Manual conversation state management: Applications must implement their own conversation storage and retrieval systems

- Token limit constraints: As conversations grow, the full history eventually exceeds the model's token limit

- Inability to reference semantically related conversations: Previous relevant discussions cannot be automatically incorporated

- No persistent storage: Conversation data is lost when applications terminate

Technical Solution

Reservoir addresses these limitations by acting as an intermediary layer that:

- Stores all messages in a Neo4j graph database with full conversation history

- Computes embeddings using BGE-Large-EN-v1.5 for semantic similarity calculation

- Creates semantic relationships (synapses) between messages when cosine similarity exceeds 0.85

- Automatically injects relevant context into new requests based on similarity and recency

- Manages token limits through intelligent truncation while preserving system and user messages
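The truncation rule can be sketched as follows. This is an illustrative sketch, not Reservoir's implementation. It makes three assumptions:

- Messages are `{"role", "content"}` dicts.
- Tokens are approximated by counting whitespace-separated words. A real implementation would use the model's tokenizer.
- "Preserving system and user messages" means every system message and the final user message are never dropped.

The oldest remaining messages are dropped until the estimate fits the budget. `truncate_messages` and `count_tokens` are hypothetical names.

```python
from typing import Dict, List

Message = Dict[str, str]


def count_tokens(message: Message) -> int:
    """Rough token estimate: whitespace-separated words (not a real tokenizer)."""
    return len(message["content"].split())


def truncate_messages(messages: List[Message], budget: int) -> List[Message]:
    """Drop the oldest unprotected messages until the estimate fits the budget.

    Protected (never dropped): every system message and the last user message.
    If the protected messages alone exceed the budget, they are still returned.
    """
    user_indices = [i for i, m in enumerate(messages) if m["role"] == "user"]
    last_user = user_indices[-1] if user_indices else None
    protected = {
        i for i, m in enumerate(messages) if m["role"] == "system" or i == last_user
    }
    dropped = set()
    total = sum(count_tokens(m) for m in messages)
    for i, message in enumerate(messages):  # oldest messages first
        if total <= budget:
            break
        if i in protected:
            continue
        dropped.add(i)
        total -= count_tokens(message)
    return [m for i, m in enumerate(messages) if i not in dropped]


history = [
    {"role": "system", "content": "Be brief."},
    {"role": "user", "content": "My name is Alice."},
    {"role": "assistant", "content": "Nice to meet you, Alice."},
    {"role": "user", "content": "What is my name?"},
]
# keeps only the system message and the final user message
print(truncate_messages(history, budget=8))
```

Dropping from the oldest end is a common choice because recent turns usually matter most to the next reply. Combining this with similarity-based context injection is what lets older but relevant material come back later.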

Architecture Overview

Reservoir is a command line tool for storing conversations and retrieving relevant context from them. It can also run as an HTTP proxy between clients and API endpoints. In that mode it intercepts API calls, enriches them with relevant context, and forwards the requests to the target Language Model provider. All conversation data remains local to the deployment environment.

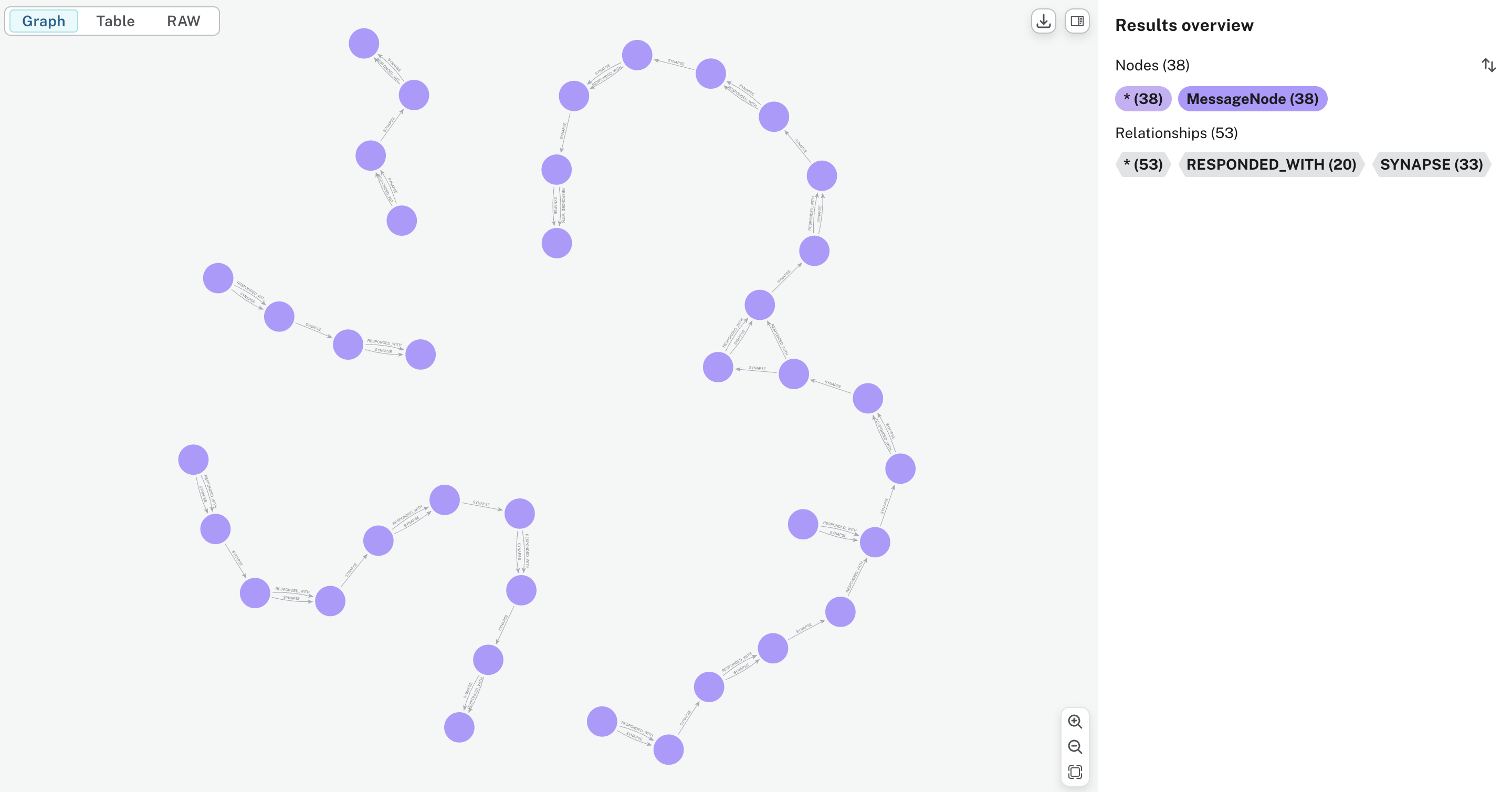

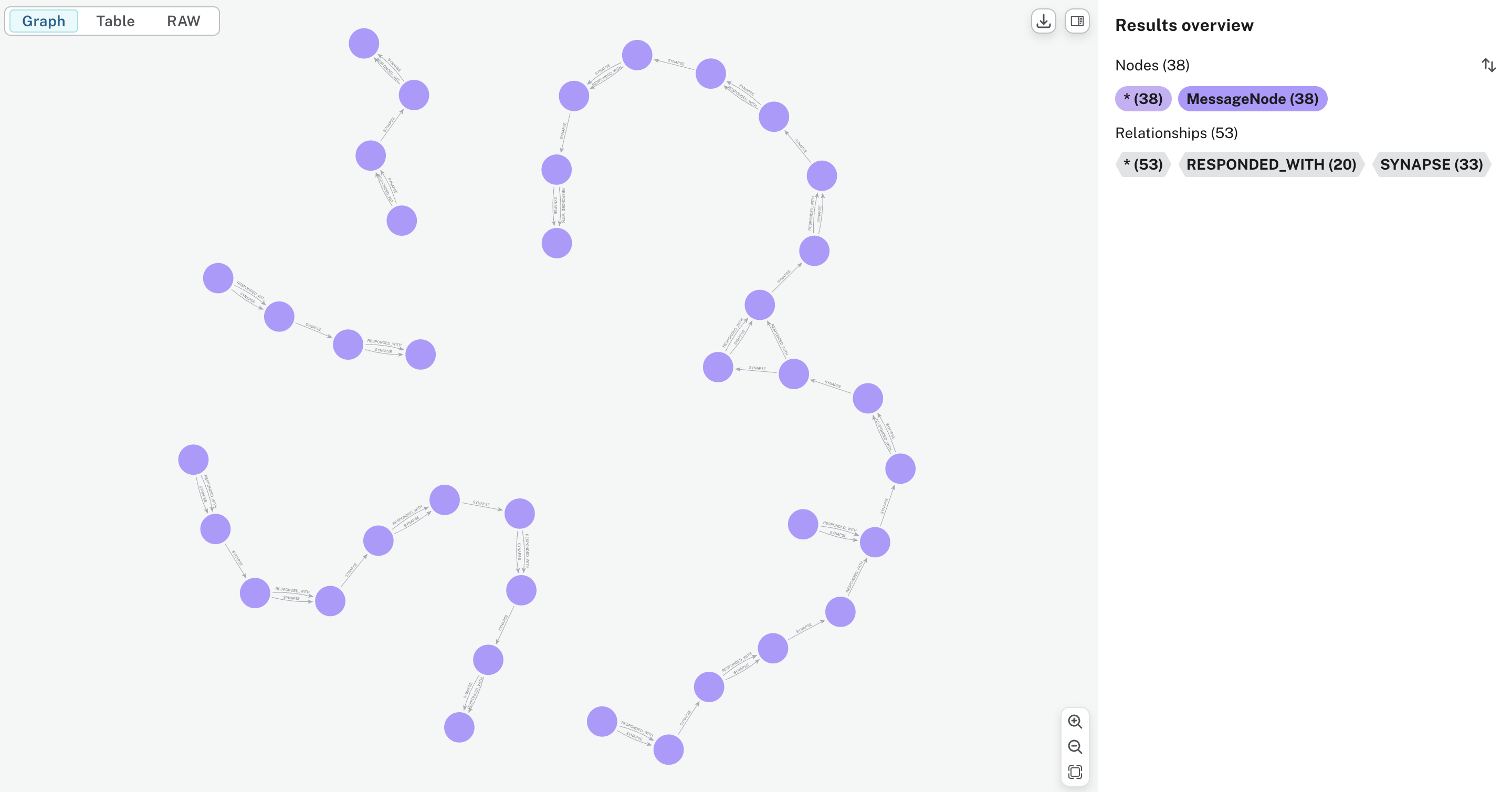

Data Model

Conversations are stored as a graph structure:

- MessageNode: Individual messages with metadata and embeddings

- EmbeddingNode: Vector representations for semantic search operations

- SYNAPSE: Relationships between semantically similar messages

- RESPONDED_WITH: Sequential conversation flow relationships

- HAS_EMBEDDING: Message-to-embedding associations
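To make the graph shape concrete, here is a small in-memory sketch of the same model. It is not Reservoir's code and does not talk to Neo4j. The assumptions are:

- The class and field names are hypothetical.
- Embeddings are short float lists standing in for BGE-Large-EN-v1.5 vectors.
- The embedding is stored directly on the message instead of on a separate EmbeddingNode.

When a message is added, it gets a SYNAPSE edge to any earlier message whose cosine similarity exceeds 0.85, the threshold stated above. A reply is linked with RESPONDED_WITH. How Reservoir scopes or indexes these comparisons in Neo4j is not shown here.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

SYNAPSE_THRESHOLD = 0.85  # similarity must exceed this for a SYNAPSE edge


def cosine_similarity(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class MessageNode:
    id: str
    role: str
    content: str
    partition: str
    instance: str
    embedding: List[float]  # stands in for HAS_EMBEDDING -> EmbeddingNode


@dataclass
class Graph:
    messages: List[MessageNode] = field(default_factory=list)
    edges: List[Tuple[str, str, str]] = field(default_factory=list)  # (from, type, to)

    def add_message(self, msg: MessageNode, reply_to: Optional[MessageNode] = None) -> None:
        # SYNAPSE: link to every earlier message that is similar enough
        for other in self.messages:
            if cosine_similarity(msg.embedding, other.embedding) > SYNAPSE_THRESHOLD:
                self.edges.append((other.id, "SYNAPSE", msg.id))
        # RESPONDED_WITH: sequential flow from a message to its reply
        if reply_to is not None:
            self.edges.append((reply_to.id, "RESPONDED_WITH", msg.id))
        self.messages.append(msg)


graph = Graph()
question = MessageNode("m1", "user", "What is Rust?", "alice", "demo", [1.0, 0.0])
answer = MessageNode("m2", "assistant", "Rust is a systems language.", "alice", "demo", [0.9, 0.1])
graph.add_message(question)
graph.add_message(answer, reply_to=question)
print(graph.edges)
```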

Supported Providers (Proxy Mode)

The system supports multiple Language Model providers through a unified interface:

- OpenAI (gpt-4, gpt-4o, gpt-3.5-turbo)

- Ollama (local model execution)

- Mistral AI

- Google Gemini

- Any OpenAI-compatible endpoint

Implementation Details

The server initializes a vector index in Neo4j for efficient semantic search and listens on a configurable port (default: 3017). Conversations are organized using a partition/instance hierarchy enabling multi-tenant isolation.

Use Cases

- Stateful chat applications: Eliminate manual conversation state management

- Cross-session context: Maintain context across application restarts

- Semantic search: Retrieve relevant historical conversations

- Multi-provider workflows: Maintain context when switching between Language Model providers

- Research and development: Build persistent knowledge bases from Language Model interactions

To get Reservoir running, see the Quick Start guide.

Getting Started

Welcome to Reservoir! This section will guide you through everything you need to get up and running with Reservoir quickly and efficiently.

What You'll Learn

In this section, you'll learn how to:

- Install Reservoir - Set up Reservoir on your system with all prerequisites

- Quick Start - Get Reservoir running in minutes with basic configuration

- Your First Chat - Send your first LLM conversation through Reservoir

Prerequisites

Before you begin, make sure you have:

- Neo4j database running (local or remote)

- Rust toolchain installed (for building from source)

- API keys for your preferred LLM providers (OpenAI, Mistral, etc.)

Getting Help

If you run into any issues during setup, check out our Help & Support section for troubleshooting guides and frequently asked questions.

Let's get started!

Installation

This guide will walk you through installing and setting up Reservoir on your system.

Prerequisites

Before installing Reservoir, make sure you have the following dependencies installed:

Required Dependencies

- Rust and Cargo (latest stable version)

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh
source ~/.cargo/env

- Neo4j Database (version 4.4 or later)

Option A: Using Docker (Recommended)

docker run \
  --name neo4j \
  -p7474:7474 -p7687:7687 \
  -d \
  -v $HOME/neo4j/data:/data \
  -v $HOME/neo4j/logs:/logs \
  -v $HOME/neo4j/import:/var/lib/neo4j/import \
  -v $HOME/neo4j/plugins:/plugins \
  --env NEO4J_AUTH=neo4j/password \
  neo4j:latest

Option B: Native Installation

- Download from Neo4j Download Center

- Follow the installation instructions for your operating system

Optional Dependencies

- mdBook (for building documentation)

cargo install mdbook

- Hurl (for running API tests)

# macOS
brew install hurl

# Linux
curl --location --remote-name https://github.com/Orange-OpenSource/hurl/releases/latest/download/hurl_amd64.deb
sudo dpkg -i hurl_amd64.deb

Installing Reservoir

From Source (Recommended)

- Clone the repository

git clone https://github.com/divanvisagie/reservoir.git
cd reservoir

- Build the project

cargo build --release

- Install the binary (optional)

cargo install --path .

Using Cargo Install

Once Reservoir is published to crates.io, you'll be able to install it directly:

cargo install reservoir

Configuration

Environment Variables

Create an .env file in your project directory or set these environment variables:

# Neo4j Configuration

NEO4J_URI=bolt://localhost:7687

NEO4J_USERNAME=neo4j

NEO4J_PASSWORD=password

# Server Configuration

RESERVOIR_PORT=3017

RESERVOIR_HOST=127.0.0.1

# API Keys (set as needed)

OPENAI_API_KEY=your-openai-key-here

MISTRAL_API_KEY=your-mistral-key-here

GEMINI_API_KEY=your-gemini-key-here

# Custom Provider Endpoints (optional)

RSV_OPENAI_BASE_URL=https://api.openai.com/v1/chat/completions

RSV_OLLAMA_BASE_URL=http://localhost:11434/v1/chat/completions

RSV_MISTRAL_BASE_URL=https://api.mistral.ai/v1/chat/completions
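To check what a given .env file resolves to, the sketch below parses simple KEY=VALUE lines. This is a hypothetical helper for illustration, not part of Reservoir, and it makes these assumptions:

- The port, host, and Neo4j URI fall back to the values in the example file above.
- Advanced dotenv features such as multi-line values and variable expansion are ignored.
- Variables already set in the process environment win over the file, which is the usual dotenv convention.

```python
import os
from typing import Dict, Mapping, Optional

# Fallbacks mirroring the example .env above (assumed, not Reservoir's documented defaults)
FALLBACKS = {
    "NEO4J_URI": "bolt://localhost:7687",
    "RESERVOIR_HOST": "127.0.0.1",
    "RESERVOIR_PORT": "3017",
}


def parse_env_file(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines; skips blanks and # comments, accepts `export KEY=...`."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        if line.startswith("export "):
            line = line[len("export "):]
        key, _, value = line.partition("=")
        value = value.split(" #", 1)[0].strip()  # drop trailing inline comments
        values[key.strip()] = value.strip('"').strip("'")
    return values


def resolve_settings(env_text: str, environ: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Precedence: process environment > .env file > fallbacks."""
    environ = os.environ if environ is None else environ
    settings = dict(FALLBACKS)
    settings.update(parse_env_file(env_text))
    for key in settings:
        if key in environ:
            settings[key] = environ[key]
    return settings
```

For example, reading a .env file with `open(".env").read()` and passing its text to `resolve_settings` shows the port and host Reservoir would see from that file.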

Using direnv (Recommended)

If you're using direnv, you can create a .envrc file:

# .envrc

export NEO4J_URI=bolt://localhost:7687

export NEO4J_USERNAME=neo4j

export NEO4J_PASSWORD=password

export RESERVOIR_PORT=3017

export OPENAI_API_KEY=your-openai-key-here

Then activate it:

direnv allow

Verification

1. Check Neo4j Connection

Make sure Neo4j is running and accessible:

# If using Docker

docker ps | grep neo4j

# Test connection (replace with your credentials)

curl -u neo4j:password -H "Content-Type: application/json" -d '{"statements":[{"statement":"RETURN 1"}]}' http://localhost:7474/db/neo4j/tx/commit

2. Start Reservoir

# From the repository directory

cargo run -- start

# Or if you installed the binary

reservoir start

You should see output similar to:

2024-01-01T12:00:00Z [INFO] Initializing vector index in Neo4j...

2024-01-01T12:00:01Z [INFO] Server starting on http://127.0.0.1:3017

3. Test the Installation

Run the included tests to verify everything is working:

# Test all endpoints

./hurl/test.sh

# Or test individual endpoints

hurl --variable USER="$USER" --variable OPENAI_API_KEY="$OPENAI_API_KEY" hurl/chat_completion.hurl

4. Simple API Test

Test with a basic curl request:

curl "http://127.0.0.1:3017/partition/$USER/instance/test/v1/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-d '{

"model": "gpt-4",

"messages": [

{

"role": "user",

"content": "Hello, Reservoir!"

}

]

}'

Troubleshooting Installation

Common Issues

Neo4j Connection Failed

- Verify Neo4j is running: docker ps, or check your local Neo4j service

- Check credentials in your environment variables

- Ensure ports 7474 and 7687 are not blocked

Cargo Build Fails

- Update Rust: rustup update

- Remove previous build artifacts, then rebuild: cargo clean

- Check for missing system dependencies

Port Already in Use

- Change the port: export RESERVOIR_PORT=3018

- Kill the process already using port 3017: lsof -ti:3017 | xargs kill
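Before picking a new port, you can check whether anything is already listening on it. This sketch uses only Python's standard `socket` module and assumes Reservoir listens on 127.0.0.1, as in the configuration above. `port_in_use` is a hypothetical helper, not a Reservoir command.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1", timeout: float = 0.5) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        try:
            return sock.connect_ex((host, port)) == 0
        except OSError:
            return False


if __name__ == "__main__":
    for candidate in (3017, 3018):
        print(candidate, "in use" if port_in_use(candidate) else "free")
```

Trying to connect, rather than trying to bind, answers the practical question "is a server already answering here?" in the same way on Linux and macOS.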

API Key Issues

- Verify your API keys are set correctly: echo $OPENAI_API_KEY

- Check for extra whitespace or quotes in environment variables

Getting Help

If you encounter issues:

- Check the Troubleshooting section

- Review the server logs for detailed error messages

- Verify all prerequisites are properly installed

- Test with the simplest possible configuration first

Next Steps

Once Reservoir is installed and running:

- Follow the Quick Start guide

- Try the Chat Gipitty Integration

- Explore the API Reference

- Check out Usage Examples

Quick Start

This guide will get you up and running with Reservoir in just a few minutes.

Before You Begin

Make sure you have:

- Reservoir installed (see Installation)

- Neo4j running locally

- At least one API key configured (OpenAI, Mistral, or Gemini)

Step 1: Start the Server

Open a terminal and start Reservoir:

cargo run -- start

You should see:

[INFO] Initializing vector index in Neo4j for semantic search

[INFO] Server starting on http://127.0.0.1:3017

Keep this terminal open - Reservoir is now running and ready to handle requests.

Step 2: Your First Chat Request

Open a new terminal and send your first chat request:

curl "http://127.0.0.1:3017/partition/$USER/instance/quickstart/v1/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-d '{

"model": "gpt-4",

"messages": [

{

"role": "user",

"content": "Hello! What is Reservoir?"

}

]

}'

The response will look like a standard OpenAI API response, but Reservoir has:

- Stored your message and the LLM's response

- Tagged them with your username and "quickstart" instance

- Made them available for future context enrichment

Step 3: See the Memory in Action

Send a follow-up question that references your previous conversation:

curl "http://127.0.0.1:3017/partition/$USER/instance/quickstart/v1/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-d '{

"model": "gpt-4",

"messages": [

{

"role": "user",

"content": "Can you elaborate on what you just told me?"

}

]

}'

Notice how the LLM understands "what you just told me" - that's Reservoir automatically injecting the previous conversation context!

Step 4: View Your Conversation History

Check what Reservoir has stored:

cargo run -- view 5 --partition "$USER" --instance quickstart

You'll see output like:

2024-01-01T12:00:00+00:00 [abc123] user: Hello! What is Reservoir?

2024-01-01T12:00:01+00:00 [abc123] assistant: Reservoir is a memory system for AI conversations...

2024-01-01T12:01:00+00:00 [def456] user: Can you elaborate on what you just told me?

2024-01-01T12:01:01+00:00 [def456] assistant: Certainly! Let me expand on Reservoir's capabilities...

Step 5: Try Different Models

Reservoir supports multiple providers. Try Ollama (no API key needed; Ollama must be running locally with the llama3.2 model pulled):

curl "http://127.0.0.1:3017/partition/$USER/instance/quickstart/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "llama3.2",

"messages": [

{

"role": "user",

"content": "What did we discuss earlier about Reservoir?"

}

]

}'

Even though you're using a different model (Ollama instead of OpenAI), Reservoir still provides the conversation context!

Understanding the URL Structure

The Reservoir API endpoint follows this pattern:

http://localhost:3017/partition/{partition}/instance/{instance}/v1/chat/completions

- Partition: Organizes conversations (typically your username)

- Instance: Sub-organizes within a partition (like "quickstart", "work", "personal")

- This keeps different contexts separate while allowing context sharing within each space
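When scripting against Reservoir, it helps to build the endpoint from its two path segments in one place. The helper below is a hypothetical sketch that follows the documented pattern. Percent-encoding the segments is a defensive choice on our part; this guide does not say which characters Reservoir accepts in partition or instance names.

```python
from urllib.parse import quote


def reservoir_chat_url(partition: str, instance: str,
                       host: str = "localhost", port: int = 3017) -> str:
    """Build http://{host}:{port}/partition/{partition}/instance/{instance}/v1/chat/completions."""
    if not partition or not instance:
        raise ValueError("partition and instance must be non-empty")
    p = quote(partition, safe="")  # encode "/", spaces, etc. so each stays one path segment
    i = quote(instance, safe="")
    return f"http://{host}:{port}/partition/{p}/instance/{i}/v1/chat/completions"


print(reservoir_chat_url("alice", "quickstart"))
```

Client libraries that append /chat/completions themselves, such as the OpenAI Python client, need the same URL without that suffix, ending in /v1, as their base URL.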

What Just Happened?

- Storage: Every message (yours and the LLM's) was stored in Neo4j

- Context Enrichment: Reservoir automatically found relevant past messages and included them in requests

- Multi-Provider: You used both OpenAI and Ollama with the same conversation history

- Organization: Your conversations were organized by partition and instance

Next Steps

Now that you've seen Reservoir in action, explore:

- Chat Gipitty Integration - Add memory to your existing cgip setup

- Python Integration - Use with the OpenAI Python library

- API Reference - Detailed API documentation

- Features - Learn about advanced features

Quick Reference

Common Commands

# Start the server

cargo run -- start

# View recent messages

cargo run -- view 10 --partition $USER --instance myapp

# Export conversations

cargo run -- export > backup.json

# Import conversations

cargo run -- import backup.json

# Search conversations

cargo run -- search "your query" --partition $USER

Environment Variables

export RESERVOIR_PORT=3017 # Server port

export NEO4J_URI=bolt://localhost:7687 # Neo4j connection

export OPENAI_API_KEY=your-key-here # OpenAI API key

export MISTRAL_API_KEY=your-key-here # Mistral API key

Ready to dive deeper? Check out the Usage Examples or learn about Chat Gipitty Integration!

Your First Chat

This guide will walk you through sending your first message through Reservoir and demonstrate how its memory and context features work.

Prerequisites

Before starting, make sure you have:

- Reservoir server running (cargo run -- start)

- Neo4j database accessible

- API keys set up (if using cloud providers)

Example 1: Testing with Ollama (Local)

Let's start with a local Ollama model since it doesn't require API keys. Make sure Ollama is running and the gemma3 model has been pulled (ollama pull gemma3):

Step 1: Send your first message

curl "http://127.0.0.1:3017/partition/$USER/instance/first-chat/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "gemma3",

"messages": [

{

"role": "user",

"content": "Hello! My name is Alice and I love programming in Python."

}

]

}'

Step 2: Ask a follow-up question

Now ask something that requires memory of the previous conversation:

curl "http://127.0.0.1:3017/partition/$USER/instance/first-chat/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "gemma3",

"messages": [

{

"role": "user",

"content": "What programming language do I like?"

}

]

}'

Magic! The Language Model will remember that you like Python, even though you didn't include the previous conversation in your request. Reservoir handled that automatically!

Step 3: Continue the conversation

curl "http://127.0.0.1:3017/partition/$USER/instance/first-chat/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "gemma3",

"messages": [

{

"role": "user",

"content": "Can you suggest a Python project for someone at my skill level?"

}

]

}'

The Language Model will make suggestions based on knowing you're Alice who loves Python programming!

Example 2: Using OpenAI Models

If you have an OpenAI API key set up:

Step 1: Introduction with GPT-4

curl "http://127.0.0.1:3017/partition/$USER/instance/gpt-chat/v1/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-d '{

"model": "gpt-4",

"messages": [

{

"role": "user",

"content": "Hi! I am working on a machine learning project about image classification."

}

]

}'

Step 2: Ask for specific help

curl "http://127.0.0.1:3017/partition/$USER/instance/gpt-chat/v1/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-d '{

"model": "gpt-4",

"messages": [

{

"role": "user",

"content": "What neural network architecture would you recommend for my project?"

}

]

}'

GPT-4 will remember you're working on image classification and provide relevant recommendations!

Example 3: Cross-Model Conversations

One of Reservoir's unique features is that conversation context can span multiple models:

Step 1: Start with Ollama

curl "http://127.0.0.1:3017/partition/$USER/instance/cross-model/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "gemma3",

"messages": [

{

"role": "user",

"content": "I am learning about quantum computing basics."

}

]

}'

Step 2: Switch to GPT-4

curl "http://127.0.0.1:3017/partition/$USER/instance/cross-model/v1/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-d '{

"model": "gpt-4",

"messages": [

{

"role": "user",

"content": "Can you explain quantum superposition in more detail?"

}

]

}'

GPT-4 will know you're learning quantum computing and provide an explanation appropriate to your level!

Understanding the Results

What Reservoir Does Behind the Scenes

When you send a message, Reservoir:

- Stores your message in Neo4j with embeddings

- Searches for relevant context from previous conversations

- Injects relevant history into your request automatically

- Forwards the enriched request to the Language Model provider

- Stores the Language Model's response for future context
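The five steps can be sketched as a toy model in Python. This is not Reservoir's algorithm. The assumptions are:

- Storage is an in-memory list instead of Neo4j.
- `embed` is a caller-supplied function standing in for BGE-Large-EN-v1.5.
- `forward` stands in for the provider call.
- "Similarity and recency" is simplified to the top-k most similar earlier messages plus the most recent ones, without duplicates.

All names are hypothetical.

```python
import math
from typing import Callable, Dict, List, Tuple

Message = Dict[str, str]


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyReservoir:
    """In-memory stand-in for the store -> search -> inject -> forward -> store loop."""

    def __init__(self, embed: Callable[[str], List[float]],
                 forward: Callable[[List[Message]], str],
                 top_k: int = 3, recent: int = 4) -> None:
        self.embed = embed        # stand-in for the embedding model
        self.forward = forward    # stand-in for the provider call
        self.top_k = top_k        # how many similar messages to inject
        self.recent = recent      # how many most-recent messages to inject
        self.store: List[Tuple[Message, List[float]]] = []  # oldest first

    def chat(self, user_text: str) -> str:
        # 1. Store the incoming message with its embedding
        query = self.embed(user_text)
        user_msg = {"role": "user", "content": user_text}
        self.store.append((user_msg, query))
        # 2. Search earlier messages by similarity, and take the most recent ones
        history = self.store[:-1]
        ranked = sorted(range(len(history)),
                        key=lambda i: cosine(query, history[i][1]), reverse=True)
        chosen = set(ranked[: self.top_k])
        chosen |= set(range(max(0, len(history) - self.recent), len(history)))
        # 3. Inject the chosen context (in chronological order) ahead of the new message
        enriched = [history[i][0] for i in sorted(chosen)] + [user_msg]
        # 4. Forward the enriched request
        reply = self.forward(enriched)
        # 5. Store the response for future context
        self.store.append(({"role": "assistant", "content": reply}, self.embed(reply)))
        return reply
```

Because the client only ever sends its newest message, the earlier "My name is Alice" style facts can still reach the model, as long as they rank as similar or recent.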

Viewing Your Conversation History

You can see your stored conversations using the CLI:

# View last 5 messages in the first-chat instance

cargo run -- view 5 --partition $USER --instance first-chat

Sample output:

2025-06-21T09:10:01+00:00 [abc123] user: Hello! My name is Alice and I love programming in Python.

2025-06-21T09:10:02+00:00 [abc123] assistant: Hello Alice! It's great to meet a fellow Python enthusiast...

2025-06-21T09:11:10+00:00 [def456] user: What programming language do I like?

2025-06-21T09:11:12+00:00 [def456] assistant: You mentioned that you love programming in Python!

2025-06-21T09:12:00+00:00 [ghi789] user: Can you suggest a Python project for someone at my skill level?

Testing Different Scenarios

Scenario 1: Different Partitions

Try organizing conversations by topic using different partitions:

# Work-related conversations

curl "http://127.0.0.1:3017/partition/work/instance/coding/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{"model": "gemma3", "messages": [{"role": "user", "content": "I need help debugging a React component."}]}'

# Personal learning

curl "http://127.0.0.1:3017/partition/personal/instance/learning/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{"model": "gemma3", "messages": [{"role": "user", "content": "I want to learn guitar playing."}]}'

Each partition maintains separate conversation history!

Scenario 2: Web Search Integration

If using a model that supports web search:

curl "http://127.0.0.1:3017/partition/$USER/instance/research/v1/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-d '{

"model": "gpt-4o-search-preview",

"messages": [{"role": "user", "content": "What are the latest trends in AI development?"}],

"web_search_options": {"enabled": true, "max_results": 5}

}'

Common Issues and Solutions

Server Not Responding

# Check if Reservoir is running

curl http://127.0.0.1:3017/health

# If not running, start it

cargo run -- start

"Model not found" Error

- For Ollama models: Make sure Ollama is running and the model is installed

- For cloud models: Check your API keys are set correctly

Empty Responses

- Check your internet connection for cloud providers

- Verify the model name is spelled correctly

- Ensure your API key has sufficient credits

Next Steps

Now that you've sent your first chat, explore these features:

- Python Integration - Use Reservoir from Python code

- Partitioning & Organization - Organize your conversations

- Chat Gipitty Integration - Add memory to your existing chat tools

- API Reference - Learn about advanced features

Congratulations! You've successfully used Reservoir to have a conversation with persistent memory. Reservoir now stores everything from your conversation and can inject the relevant parts into future chats!

Usage & Integration

Reservoir is designed to work seamlessly with your existing AI workflows and tools. This section covers various ways to integrate and use Reservoir in your projects.

Integration Options

Chat Applications

- Chat Gipitty Integration - Add persistent memory to your Chat Gipitty conversations

- Python with OpenAI Library - Use Reservoir with the popular OpenAI Python client

Direct API Usage

- Curl Examples - Command-line examples for testing and scripting

- Ollama Integration - Use Reservoir with local Ollama models

Common Use Cases

- Multi-session conversations - Maintain context across different chat sessions

- Cross-application memory - Share conversation history between different tools

- Local AI workflows - Keep conversations private while using local models

- Research and development - Build applications that learn from past interactions

Choosing Your Integration

- New to AI development? Start with Chat Gipitty Integration

- Python developer? Check out Python with OpenAI Library

- Command-line user? Try the Curl Examples

- Privacy-focused? Use Ollama Integration for fully local conversations

Each integration method maintains the same core benefits: persistent memory, context enrichment, and seamless AI conversations.

Ollama Client Integration

You can use reservoir as a memory system for the Ollama command line client by integrating it with a simple bash script.

You can place the following function in your ~/.bashrc or ~/.zshrc file. It uses Reservoir to:

- Store your query

- Fetch relevant context (semantic search results and recent history)

- Prepend the context to your query

- Send the request to the model

- Save the model's response

function contextual_ollama_with_ingest() {

local user_query="$1"

# Validate input

if [ -z "$user_query" ]; then

echo "Usage: contextual_ollama_with_ingest 'Your question goes here'" >&2

return 1

fi

# Ingest the user's query into Reservoir

echo "$user_query" | reservoir ingest

# Generate dynamic system prompt with context

local system_prompt_content=$(

echo "the following is info from semantic search based on your query:"

reservoir search "$user_query" --semantic --link

echo "the following is recent history:"

reservoir view 10

)

local full_prompt_content=$(

echo "You are a helpful assistant. Use the following context to answer the user's question."

echo "$system_prompt_content"

echo "User's question: ${user_query}"

)

# Call Ollama with the enriched prompt

local assistant_response=$(ollama run gemma3 "$full_prompt_content")

# Store the assistant's response

echo "$assistant_response" | reservoir ingest --role assistant

# Display the response

echo "$assistant_response"

}

# Create a convenient alias

alias olm='contextual_ollama_with_ingest'

Because Reservoir reads input from standard input and writes results to standard output, it can serve as the semantic memory for any shell interaction with a language model.

Chat Gipitty Integration

Reservoir was originally designed as a memory system for Chat Gipitty. This integration gives your cgip conversations persistent memory, context awareness, and the ability to search through your LLM interaction history.

What You Get

When you integrate Reservoir with Chat Gipitty, you get:

- Persistent Memory: Your conversations are remembered across sessions

- Semantic Search: Find relevant past discussions automatically

- Context Enrichment: Each response is informed by your conversation history

- Multi-Model Support: Switch between different LLM providers while maintaining context

Setup

Prerequisites

- Chat Gipitty installed and working

- Reservoir installed and running (see Installation)

- Your shell configured (bash or zsh)

Installation

Add this function to your ~/.bashrc or ~/.zshrc file:

function contextual_cgip_with_ingest() {

local user_query="$1"

# Validate input

if [ -z "$user_query" ]; then

echo "Usage: contextual_cgip_with_ingest 'Your question goes here'" >&2

return 1

fi

# Ingest the user's query into Reservoir

echo "$user_query" | reservoir ingest

# Generate dynamic system prompt with context

local system_prompt_content=$(

echo "the following is info from semantic search based on your query:"

reservoir search "$user_query" --semantic --link

echo "the following is recent history:"

reservoir view 10

)

# Call cgip with enriched context

local assistant_response=$(cgip "${user_query}" --system-prompt="${system_prompt_content}")

# Store the assistant's response

echo "$assistant_response" | reservoir ingest --role assistant

# Display the response

echo "$assistant_response"

}

# Create a convenient alias

alias gpty='contextual_cgip_with_ingest'

After adding this to your shell configuration, reload it:

# For bash

source ~/.bashrc

# For zsh

source ~/.zshrc

Usage

Basic Usage

Use the function directly:

contextual_cgip_with_ingest "Explain quantum computing in simple terms"

Or use the convenient alias:

gpty "What is machine learning?"

Follow-up Questions

The magic happens with follow-up questions:

gpty "Explain neural networks"

# ... LLM responds with explanation ...

gpty "How do they relate to what we discussed about machine learning earlier?"

# ... LLM responds with context from the previous conversation ...

Different Topics

Start a new topic, and Reservoir will find relevant context:

gpty "I'm learning Rust programming"

# ... later in a different session ...

gpty "Show me some advanced Rust patterns"

# Reservoir will remember you're learning Rust and provide appropriate context

How It Works

Here's what happens when you use the integrated function:

- Query Ingestion: Your question is stored in Reservoir

- Context Gathering: Reservoir searches for semantically similar past conversations and for recent conversation history

- Context Injection: This context is provided to cgip as a system prompt

- Enhanced Response: cgip responds with awareness of your history

- Response Storage: The LLM's response is stored for future context
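The same flow can be driven from Python by shelling out to the CLI. This sketch uses only the commands and flags that appear in the shell function above:

- reservoir ingest
- reservoir search --semantic --link
- reservoir view 10
- reservoir ingest --role assistant

`build_system_prompt`, `ask`, and the injectable `run` parameter are hypothetical. The `llm` callable stands in for cgip or any other client.

```python
import subprocess
from typing import Callable, List, Optional


def run_cli(args: List[str], stdin: Optional[str] = None) -> str:
    """Run a command and return its stdout (raises CalledProcessError on failure)."""
    result = subprocess.run(args, input=stdin, capture_output=True, text=True, check=True)
    return result.stdout


def build_system_prompt(query: str, run: Callable = run_cli) -> str:
    """Combine semantic search results and recent history, like the shell function."""
    parts = [
        "the following is info from semantic search based on your query:",
        run(["reservoir", "search", query, "--semantic", "--link"]),
        "the following is recent history:",
        run(["reservoir", "view", "10"]),
    ]
    return "\n".join(parts)


def ask(query: str, llm: Callable[[str, str], str], run: Callable = run_cli) -> str:
    """Ingest the query, gather context, call the model, and ingest the reply."""
    run(["reservoir", "ingest"], stdin=query)
    system_prompt = build_system_prompt(query, run)
    answer = llm(query, system_prompt)
    run(["reservoir", "ingest", "--role", "assistant"], stdin=answer)
    return answer
```

Passing the argument list directly to `subprocess.run` (no shell) avoids quoting problems when the question contains spaces or quotes. Making `run` injectable lets you test the flow without a live Reservoir install.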

Advanced Configuration

Custom Search Parameters

You can modify the function to customize how context is gathered:

function contextual_cgip_with_ingest() {

local user_query="$1"

if [ -z "$user_query" ]; then

echo "Usage: contextual_cgip_with_ingest 'Your question goes here'" >&2

return 1

fi

echo "$user_query" | reservoir ingest

# Customize these parameters

local system_prompt_content=$(

echo "=== Relevant Context ==="

reservoir search "$user_query" --semantic --link --limit 5

echo ""

echo "=== Recent History ==="

reservoir view 15 --partition "$USER" --instance "cgip"

)

local assistant_response=$(cgip "${user_query}" --system-prompt="${system_prompt_content}")

echo "$assistant_response" | reservoir ingest --role assistant

echo "$assistant_response"

}

Partitioned Conversations

Organize your conversations by topic or project:

function gpty_work() {

local user_query="$1"

if [ -z "$user_query" ]; then

echo "Usage: gpty_work 'Your work-related question'" >&2

return 1

fi

echo "$user_query" | reservoir ingest --partition "$USER" --instance "work"

local system_prompt_content=$(

echo "Context from work conversations:"

reservoir search "$user_query" --semantic --partition "$USER" --instance "work"

echo "Recent work discussion:"

reservoir view 10 --partition "$USER" --instance "work"

)

local assistant_response=$(cgip "${user_query}" --system-prompt="${system_prompt_content}")

echo "$assistant_response" | reservoir ingest --role assistant --partition "$USER" --instance "work"

echo "$assistant_response"

}

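function gpty_personal() {
  # Same flow as gpty_work, scoped to the "personal" instance
  local user_query="$1"
  echo "$user_query" | reservoir ingest --partition "$USER" --instance "personal"
  local system_prompt_content=$(reservoir search "$user_query" --semantic --partition "$USER" --instance "personal"; reservoir view 10 --partition "$USER" --instance "personal")
  local assistant_response=$(cgip "${user_query}" --system-prompt="${system_prompt_content}")
  echo "$assistant_response" | reservoir ingest --role assistant --partition "$USER" --instance "personal"
  echo "$assistant_response"
}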

Model Selection

Use different models while maintaining context:

function gpty_creative() {

local user_query="$1"

echo "$user_query" | reservoir ingest

local system_prompt_content=$(

reservoir search "$user_query" --semantic --link

reservoir view 5

)

# Use a creative model via cgip configuration

local assistant_response=$(cgip "${user_query}" --system-prompt="${system_prompt_content}" --model gpt-4)

echo "$assistant_response" | reservoir ingest --role assistant

echo "$assistant_response"

}

Benefits of This Integration

Continuous Learning

- Every interaction is stored in Reservoir and can be drawn on later

- Context builds up over time, making responses more personalized

- No need to re-explain your projects or preferences

Cross-Session Memory

- Resume conversations from days or weeks ago

- Reference past decisions and discussions

- Build on previous explanations and examples

Semantic Understanding

- Ask "What did we discuss about X?" and get relevant results

- Similar topics are automatically connected

- Context is found even if you use different wording

Privacy

- All your conversation history stays local

- No data sent to external services beyond the LLM API calls

- You control your data completely

Troubleshooting

Function Not Found

Make sure you've sourced your shell configuration:

source ~/.bashrc # or ~/.zshrc

No Context Being Added

Check that Reservoir is running:

# Should show Reservoir process

ps aux | grep reservoir

# Start if not running

cargo run -- start

Empty Search Results

Build up some conversation history first:

gpty "Tell me about artificial intelligence"

gpty "What are neural networks?"

gpty "How does machine learning work?"

# Now try a search

gpty "What did we discuss about AI?"

Permission Issues

Make sure the reservoir binary is on your PATH (for example, installed with cargo install --path .) and that each command works on its own:

# Test individual commands

echo "test" | reservoir ingest

reservoir view 5

reservoir search "test"

Next Steps

- Explore API Reference to understand Reservoir's capabilities

- Learn about Partitioning to organize conversations

- Check out Python Integration for programmatic access

- See Troubleshooting if you encounter issues

The Chat Gipitty integration transforms your LLM interactions from isolated conversations into a connected, searchable knowledge base that grows smarter with every interaction.

Python Integration

Reservoir works seamlessly with the popular OpenAI Python library. You simply point the client to your Reservoir instance instead of directly to OpenAI, and Reservoir handles all the memory and context management automatically.

Setup

First, install the OpenAI Python library if you haven't already:

pip install openai

Basic Configuration

import os

from openai import OpenAI

INSTANCE = "my-application"

PARTITION = os.getenv("USER")

RESERVOIR_PORT = os.getenv('RESERVOIR_PORT', '3017')

RESERVOIR_BASE_URL = f"http://localhost:{RESERVOIR_PORT}/partition/{PARTITION}/instance/{INSTANCE}/v1"

OpenAI Models

Basic Usage with OpenAI

import os

from openai import OpenAI

INSTANCE = "my-application"

PARTITION = os.getenv("USER")

RESERVOIR_PORT = os.getenv('RESERVOIR_PORT', '3017')

RESERVOIR_BASE_URL = f"http://localhost:{RESERVOIR_PORT}/partition/{PARTITION}/instance/{INSTANCE}/v1"

client = OpenAI(

base_url=RESERVOIR_BASE_URL,

api_key=os.environ.get("OPENAI_API_KEY")

)

completion = client.chat.completions.create(

model="gpt-4",

messages=[

{

"role": "user",

"content": "Write a one-sentence bedtime story about a curious robot."

}

]

)

print(completion.choices[0].message.content)

With Web Search Options

For models that support web search (like gpt-4o-search-preview), you can enable web search capabilities:

completion = client.chat.completions.create(

model="gpt-4o-search-preview",

messages=[

{

"role": "user",

"content": "What are the latest trends in machine learning?"

}

],

extra_body={

"web_search_options": {

"enabled": True,

"max_results": 5

}

}

)

Ollama Models (Local)

Using Ollama (No API Key Required)

import os

from openai import OpenAI

INSTANCE = "my-application"

PARTITION = os.getenv("USER")

RESERVOIR_PORT = os.getenv('RESERVOIR_PORT', '3017')

RESERVOIR_BASE_URL = f"http://localhost:{RESERVOIR_PORT}/partition/{PARTITION}/instance/{INSTANCE}/v1"

client = OpenAI(

base_url=RESERVOIR_BASE_URL,

api_key="not-needed-for-ollama" # Ollama doesn't require API keys

)

completion = client.chat.completions.create(

model="llama3.2", # or "gemma3", or any Ollama model

messages=[

{

"role": "user",

"content": "Explain the concept of recursion with a simple example."

}

]

)

print(completion.choices[0].message.content)

Supported Models

Reservoir automatically routes requests to the appropriate provider based on the model name:

| Model | Provider | API Key Required |
|---|---|---|
| gpt-4, gpt-4o, gpt-4o-mini, gpt-3.5-turbo | OpenAI | Yes (OPENAI_API_KEY) |
| gpt-4o-search-preview | OpenAI | Yes (OPENAI_API_KEY) |
| llama3.2, gemma3, or any custom name | Ollama | No |
| mistral-large-2402 | Mistral | Yes (MISTRAL_API_KEY) |
| gemini-2.0-flash, gemini-2.5-flash-preview-05-20 | Gemini | Yes (GEMINI_API_KEY) |

Note: Any model name not explicitly configured will default to using Ollama.
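The fallback rule can be pictured as a lookup table with a default. The following is a minimal, hypothetical sketch that mirrors the table above. Reservoir's real routing code is not shown here and may use different matching rules, such as name prefixes. KNOWN_MODELS, API_KEY_VARS, route_model and required_key_var are illustrative names only.

```python
# Explicit model-name-to-provider table, copied from the "Supported Models" table
KNOWN_MODELS = {
    "gpt-4": "openai",
    "gpt-4o": "openai",
    "gpt-4o-mini": "openai",
    "gpt-3.5-turbo": "openai",
    "gpt-4o-search-preview": "openai",
    "mistral-large-2402": "mistral",
    "gemini-2.0-flash": "gemini",
    "gemini-2.5-flash-preview-05-20": "gemini",
}

# Environment variable holding each provider's API key (None = no key needed)
API_KEY_VARS = {
    "openai": "OPENAI_API_KEY",
    "mistral": "MISTRAL_API_KEY",
    "gemini": "GEMINI_API_KEY",
    "ollama": None,
}


def route_model(model: str) -> str:
    """Return the provider for a model name; unlisted names fall back to Ollama."""
    return KNOWN_MODELS.get(model, "ollama")


def required_key_var(model: str):
    """Return the API-key environment variable a model needs, or None."""
    return API_KEY_VARS[route_model(model)]


if __name__ == "__main__":
    for name in ["gpt-4o", "llama3.2", "gemini-2.0-flash", "my-custom-model"]:
        print(name, route_model(name), required_key_var(name))
```

Under this table, route_model("my-custom-model") returns "ollama" and needs no key.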

Environment Variables

You can customize provider endpoints and set API keys using environment variables:

import os

# Set environment variables (or use .env file)

os.environ['OPENAI_API_KEY'] = 'your-openai-key'

os.environ['MISTRAL_API_KEY'] = 'your-mistral-key'

os.environ['GEMINI_API_KEY'] = 'your-gemini-key'

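# Custom provider endpoints (optional). These are read by the Reservoir server process,
# so set them in the environment Reservoir starts in; changing os.environ in a client
# script does not affect a Reservoir server that is already running.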

os.environ['RSV_OPENAI_BASE_URL'] = 'https://api.openai.com/v1/chat/completions'

os.environ['RSV_OLLAMA_BASE_URL'] = 'http://localhost:11434/v1/chat/completions'

os.environ['RSV_MISTRAL_BASE_URL'] = 'https://api.mistral.ai/v1/chat/completions'

Complete Example

Here's a complete example that demonstrates Reservoir's memory capabilities:

import os

from openai import OpenAI

def setup_reservoir_client():
    """Set up a Reservoir client with the partition/instance configuration"""
    instance = "chat-example"
    partition = os.getenv("USER", "default")
    port = os.getenv('RESERVOIR_PORT', '3017')
    base_url = f"http://localhost:{port}/v1/partition/{partition}/instance/{instance}"
    return OpenAI(
        base_url=base_url,
        api_key=os.environ.get("OPENAI_API_KEY", "not-needed-for-ollama")
    )


def chat_with_memory(message, model="gpt-4"):
    """Send a message through Reservoir with automatic memory"""
    client = setup_reservoir_client()
    completion = client.chat.completions.create(
        model=model,
        messages=[
            {
                "role": "user",
                "content": message
            }
        ]
    )
    return completion.choices[0].message.content


# Example conversation that builds context
if __name__ == "__main__":
    # First message
    response1 = chat_with_memory("My name is Alice and I love Python programming.")
    print("Assistant:", response1)

    # Second message - Reservoir will automatically include context
    response2 = chat_with_memory("What programming language do I like?")
    print("Assistant:", response2)  # Should recall that you like Python

    # Third message - Even more context
    response3 = chat_with_memory("Can you suggest a project for me?")
    print("Assistant:", response3)  # Should suggest Python projects for Alice

Benefits of Using Reservoir

When you use Reservoir with the OpenAI library, you get:

- Automatic Context: Previous conversations are automatically included

- Cross-Session Memory: Conversations persist across different Python sessions

- Smart Token Management: Reservoir handles token limits automatically

- Multi-Provider Support: Switch between different LLM providers seamlessly

- Local Storage: Your conversation history is stored locally in Reservoir's Neo4j database (requests to cloud models are still sent to their provider)

Next Steps

- Learn about Partitioning & Organization to organize your conversations

- Check out Token Management to understand how Reservoir handles context limits

- Explore the API Reference for more advanced usage patterns

Curl Examples

This page provides comprehensive examples of using Reservoir with curl commands. These examples are perfect for testing, scripting, or understanding the API structure.

Basic URL Structure

Instead of calling the provider directly, you call Reservoir with this URL pattern:

- Direct Provider: https://api.openai.com/v1/chat/completions

- Through Reservoir: http://127.0.0.1:3017/partition/$USER/instance/reservoir/v1/chat/completions

Where:

- $USER is your system username (acts as the partition)

- reservoir is the instance name (you can use any name)

OpenAI Models

Basic GPT-4 Example

curl "http://127.0.0.1:3017/partition/$USER/instance/reservoir/v1/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-d '{

"model": "gpt-4",

"messages": [

{

"role": "user",

"content": "Write a one-sentence bedtime story about a brave little toaster."

}

]

}'
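To script the same call from Python without the openai package, the standard library is enough. The following is a minimal sketch that builds the request from the curl example above (URL, headers and JSON body). build_chat_request is a hypothetical helper. It only builds the request, so you can inspect it before sending.

```python
import json
import os
import urllib.request


def build_chat_request(url, model, content, api_key=None):
    """Build (but do not send) a POST request equivalent to the curl example."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": content}],
    }).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Only attach the Authorization header when a key is available
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


if __name__ == "__main__":
    user = os.getenv("USER", "default")
    req = build_chat_request(
        f"http://127.0.0.1:3017/partition/{user}/instance/reservoir/v1/chat/completions",
        "gpt-4",
        "Write a one-sentence bedtime story about a brave little toaster.",
        api_key=os.getenv("OPENAI_API_KEY"),
    )
    print(req.get_method(), req.full_url)
```

To send the request, call urllib.request.urlopen(req) and parse the body with json.load. For Ollama models, leave api_key as None so that no Authorization header is added, which matches the Ollama curl examples.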

GPT-4 with System Message

curl "http://127.0.0.1:3017/partition/$USER/instance/reservoir/v1/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-d '{

"model": "gpt-4",

"messages": [

{

"role": "system",

"content": "You are a helpful assistant that explains complex topics in simple terms."

},

{

"role": "user",

"content": "Explain quantum computing to a 10-year-old."

}

]

}'

Web Search Integration

For models that support web search (like gpt-4o-search-preview):

curl "http://127.0.0.1:3017/partition/$USER/instance/reservoir/v1/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-d '{

"model": "gpt-4o-search-preview",

"messages": [

{

"role": "user",

"content": "What are the latest developments in AI?"

}

],

"web_search_options": {

"enabled": true,

"max_results": 5

}

}'

Ollama Models (Local)

Basic Ollama Example

No API key needed for Ollama models:

curl "http://127.0.0.1:3017/partition/$USER/instance/reservoir/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "gemma3",

"messages": [

{

"role": "user",

"content": "Explain quantum computing in simple terms."

}

]

}'

Using Llama Models

curl "http://127.0.0.1:3017/partition/$USER/instance/reservoir/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "llama3.2",

"messages": [

{

"role": "user",

"content": "Write a Python function to calculate fibonacci numbers."

}

]

}'

Other Providers

Mistral AI

curl "http://127.0.0.1:3017/partition/$USER/instance/reservoir/v1/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $MISTRAL_API_KEY" \

-d '{

"model": "mistral-large-2402",

"messages": [

{

"role": "user",

"content": "Explain the differences between functional and object-oriented programming."

}

]

}'

Google Gemini

curl "http://127.0.0.1:3017/partition/$USER/instance/reservoir/v1/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $GEMINI_API_KEY" \

-d '{

"model": "gemini-2.0-flash",

"messages": [

{

"role": "user",

"content": "Compare different sorting algorithms and their time complexities."

}

]

}'

Partitioning Examples

Using Different Partitions

You can organize conversations by using different partition names:

# Work conversations

curl "http://127.0.0.1:3017/partition/work/instance/coding/v1/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-d '{

"model": "gpt-4",

"messages": [{"role": "user", "content": "Review this code for security issues"}]

}'

# Personal conversations

curl "http://127.0.0.1:3017/partition/personal/instance/creative/v1/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-d '{

"model": "gpt-4",

"messages": [{"role": "user", "content": "Help me write a short story"}]

}'

Using Different Instances

Different instances within the same partition:

# Development instance

curl "http://127.0.0.1:3017/partition/$USER/instance/development/v1/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-d '{

"model": "gpt-4",

"messages": [{"role": "user", "content": "Debug this Python error"}]

}'

# Research instance

curl "http://127.0.0.1:3017/partition/$USER/instance/research/v1/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-d '{

"model": "gpt-4",

"messages": [{"role": "user", "content": "Explain machine learning concepts"}]

}'

Testing Scenarios

Test Basic Connectivity

# Simple test with Ollama (no API key needed)

curl "http://127.0.0.1:3017/partition/test/instance/basic/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "gemma3",

"messages": [{"role": "user", "content": "Hello, can you hear me?"}]

}'

Test Memory Functionality

Send multiple requests to see memory in action:

# First message

curl "http://127.0.0.1:3017/partition/test/instance/memory/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "gemma3",

"messages": [{"role": "user", "content": "My favorite color is blue."}]

}'

# Second message - should remember the color

curl "http://127.0.0.1:3017/partition/test/instance/memory/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "gemma3",

"messages": [{"role": "user", "content": "What is my favorite color?"}]

}'

Error Handling

Invalid Model

curl "http://127.0.0.1:3017/partition/$USER/instance/test/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "nonexistent-model",

"messages": [{"role": "user", "content": "Hello"}]

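}'

# Unrecognized model names are routed to Ollama, so expect an error from Ollama unless a model with this name has been pulled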

Missing API Key

curl "http://127.0.0.1:3017/partition/$USER/instance/test/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "gpt-4",

"messages": [{"role": "user", "content": "Hello"}]

}'

# Will return error because OPENAI_API_KEY is required for GPT-4

Environment Variables

Set up your environment for easier testing:

export OPENAI_API_KEY="your-openai-key"

export MISTRAL_API_KEY="your-mistral-key"

export GEMINI_API_KEY="your-gemini-key"

export RESERVOIR_URL="http://127.0.0.1:3017"

export USER_PARTITION="$USER"

Then use in requests:

curl "$RESERVOIR_URL/partition/$USER_PARTITION/instance/test/v1/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-d '{

"model": "gpt-4",

"messages": [{"role": "user", "content": "Hello from the environment!"}]

}'

Debugging Tips

Pretty Print JSON Response

Add | jq to format the JSON response:

curl "http://127.0.0.1:3017/partition/$USER/instance/test/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "gemma3",

"messages": [{"role": "user", "content": "Hello"}]

}' | jq

Verbose Output

Use -v flag to see request/response headers:

curl -v "http://127.0.0.1:3017/partition/$USER/instance/test/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "gemma3",

"messages": [{"role": "user", "content": "Hello"}]

}'

Save Response

Save the response to a file:

curl "http://127.0.0.1:3017/partition/$USER/instance/test/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "gemma3",

"messages": [{"role": "user", "content": "Hello"}]

}' -o response.json

Next Steps

- Learn about API Reference for more endpoint details

- Check out Python Integration for programmatic usage

- Explore Partitioning & Organization to organize your conversations

Ollama Integration

Reservoir works seamlessly with Ollama, allowing you to use local AI models with persistent memory and context enrichment. This is perfect for privacy-focused workflows where you want to keep all your conversations completely local.

What is Ollama?

Ollama is a tool that makes it easy to run large language models locally on your machine. It supports popular models like Llama, Gemma, and many others, all running entirely on your hardware.

Benefits of Using Ollama with Reservoir

- Complete Privacy: All conversations stay on your device

- No API Keys: No need for cloud service API keys

- Offline Capable: Works without internet connection

- Cost Effective: No usage-based charges

- Full Control: Choose exactly which models to use

Setting Up Ollama

Step 1: Install Ollama

First, install Ollama from ollama.ai:

# On macOS

brew install ollama

# On Linux

curl -fsSL https://ollama.ai/install.sh | sh

# Or download from https://ollama.ai/download

Step 2: Start Ollama Service

ollama serve

This starts the Ollama service on http://localhost:11434.

Step 3: Download Models

Download the models you want to use:

# Download Gemma 3 (Google's model)

ollama pull gemma3

# Download Llama 3.2 (Meta's model)

ollama pull llama3.2

# Download Mistral (Mistral AI's model)

ollama pull mistral

# See all available models

ollama list

Using Ollama with Reservoir

Regular Mode

By default, Reservoir routes any unrecognized model names to Ollama:

curl "http://127.0.0.1:3017/partition/$USER/instance/ollama-chat/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "gemma3",

"messages": [

{

"role": "user",

"content": "Explain machine learning in simple terms."

}

]

}'

No API key required!

Ollama Mode

Reservoir also provides a special "Ollama mode" that makes it a drop-in replacement for Ollama's API:

# Start Reservoir in Ollama mode

cargo run -- start --ollama

In Ollama mode, Reservoir:

- Uses the same API endpoints as Ollama

- Provides the same response format

- Adds memory and context enrichment automatically

- Makes existing Ollama clients work with persistent memory

Testing Ollama Mode

# Test with the standard Ollama endpoint format

curl "http://127.0.0.1:3017/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "gemma3",

"messages": [

{

"role": "user",

"content": "Hello, can you remember our previous conversations?"

}

]

}'

Popular Ollama Models

Gemma 3 (Google)

Excellent for general conversation and coding:

curl "http://127.0.0.1:3017/partition/$USER/instance/coding/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "gemma3",

"messages": [

{

"role": "user",

"content": "Write a Python function to sort a list of dictionaries by a specific key."

}

]

}'

Llama 3.2 (Meta)

Great for reasoning and complex tasks:

curl "http://127.0.0.1:3017/partition/$USER/instance/reasoning/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "llama3.2",

"messages": [

{

"role": "user",

"content": "Solve this logic puzzle: If all roses are flowers, and some flowers are red, can we conclude that some roses are red?"

}

]

}'

Mistral 7B

Efficient and good for general tasks:

curl "http://127.0.0.1:3017/partition/$USER/instance/general/v1/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "mistral",

"messages": [

{

"role": "user",

"content": "Summarize the key points of quantum computing for a beginner."

}

]

}'

Python Integration with Ollama

Using the OpenAI library with local Ollama models:

import os

from openai import OpenAI

# Setup for Ollama through Reservoir

INSTANCE = "ollama-python"

PARTITION = os.getenv("USER", "default")

RESERVOIR_PORT = os.getenv('RESERVOIR_PORT', '3017')

RESERVOIR_BASE_URL = f"http://localhost:{RESERVOIR_PORT}/v1/partition/{PARTITION}/instance/{INSTANCE}"

client = OpenAI(

base_url=RESERVOIR_BASE_URL,

api_key="not-needed-for-ollama" # Ollama doesn't require API keys

)

# Chat with memory using local model

completion = client.chat.completions.create(

model="gemma3",

messages=[

{

"role": "user",

"content": "My favorite hobby is gardening. What plants would you recommend for a beginner?"

}

]

)

print(completion.choices[0].message.content)

# Ask a follow-up that requires memory

follow_up = client.chat.completions.create(

model="gemma3",

messages=[

{

"role": "user",

"content": "What tools do I need to get started with my hobby?"

}

]

)

print(follow_up.choices[0].message.content)

# Will remember you're interested in gardening!

Environment Configuration

You can customize the Ollama endpoint if needed:

# Default Ollama endpoint

export RSV_OLLAMA_BASE_URL="http://localhost:11434/v1/chat/completions"

# Custom endpoint (if running Ollama on different port/host)

export RSV_OLLAMA_BASE_URL="http://192.168.1.100:11434/v1/chat/completions"

Performance Tips

Model Selection

- gemma3: Good balance of speed and quality

- llama3.2: Higher quality but slower

- mistral: Fast and efficient

- smaller models (7B parameters): Faster on limited hardware

- larger models (13B+): Better quality but require more resources

Hardware Considerations

- RAM: 8GB minimum, 16GB+ recommended for larger models

- GPU: Optional but significantly speeds up inference

- Storage: Models range from 4GB to 40GB+ each

Optimizing Performance

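# Ollama uses a supported GPU automatically when one is available; no extra flag is needed

ollama run gemma3

# Monitor resource usage (the PROCESSOR column shows whether a model is loaded on GPU or CPU)

ollama ps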

Troubleshooting Ollama

Common Issues

Ollama Not Found

# Check if Ollama is running

curl http://localhost:11434/api/tags

# If not running, start it

ollama serve
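The same checks can be scripted. The following is a minimal sketch using only the Python standard library. It assumes Ollama's default address and its /api/tags listing (the same endpoint as the curl check above), which returns a JSON object with a models array whose entries have a name field such as gemma3:latest. The function names are hypothetical.

```python
import http.client
import json
import urllib.error
import urllib.request


def fetch_ollama_models(base_url="http://localhost:11434", timeout=2.0):
    """Return installed model names from Ollama's /api/tags, or None if unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            data = json.load(resp)
    except (urllib.error.URLError, http.client.HTTPException, OSError, ValueError):
        # Connection refused, timeout, bad HTTP, or a non-JSON body: treat as "not running"
        return None
    return [m.get("name", "") for m in data.get("models", [])]


def model_installed(names, model):
    """Check for a model, treating a bare name like "gemma3" as "gemma3:latest"."""
    wanted = model if ":" in model else f"{model}:latest"
    return wanted in names or model in names


if __name__ == "__main__":
    names = fetch_ollama_models()
    if names is None:
        print("Ollama is not reachable; try `ollama serve`")
    else:
        print("gemma3 installed:", model_installed(names, "gemma3"))
```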

Model Not Available

# List installed models

ollama list

# Pull missing model

ollama pull gemma3

Performance Issues

# Check system resources

ollama ps

# Try a smaller model

ollama pull gemma3:1b # 1B parameter version

Error Messages

- "connection refused": Ollama service isn't running

- "model not found": Model needs to be pulled with ollama pull

- "out of memory": Try a smaller model or close other applications

Combining Local and Cloud Models

One of Reservoir's strengths is seamlessly switching between local and cloud models:

import os

from openai import OpenAI

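# Same client setup as the previous example

RESERVOIR_BASE_URL = f"http://localhost:{os.getenv('RESERVOIR_PORT', '3017')}/v1/partition/{os.getenv('USER', 'default')}/instance/ollama-python"

client = OpenAI(base_url=RESERVOIR_BASE_URL, api_key=os.environ.get("OPENAI_API_KEY", ""))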

# Start with local model for initial draft

local_response = client.chat.completions.create(

model="gemma3", # Local Ollama model

messages=[{"role": "user", "content": "Write a draft email about project updates"}]

)

# Refine with cloud model for better quality

cloud_response = client.chat.completions.create(

model="gpt-4", # Cloud OpenAI model

messages=[{"role": "user", "content": "Please improve the writing quality and make it more professional"}]

)

Both responses will have access to the same conversation context!

Next Steps

- Python Integration - Use Ollama models from Python

- Features - Multi-Provider Support - Learn about mixing different providers

- Partitioning & Organization - Organize your local conversations

- Architecture - Data Model - Understand how conversations are stored

Ready to go private? 🔒 With Ollama and Reservoir, you have a completely local AI assistant with persistent memory!

API Overview

Reservoir provides an OpenAI-compatible API endpoint that acts as a smart proxy between your application and LLM providers. This section covers the core API structure and basic usage patterns.

URL Structure

The Reservoir API follows this pattern:

/v1/partition/{partition}/instance/{instance}/chat/completions

Parameters

- {partition}: A broad category for organizing conversations (e.g., project name, application name, username)

- {instance}: A specific context within the partition (e.g., user ID, session ID, specific feature)

This structure allows you to organize conversations hierarchically and scope context enrichment appropriately.

Example URL Transformation

- Instead of: https://api.openai.com/v1/chat/completions

- Use: http://localhost:3017/v1/partition/$USER/instance/my-application/chat/completions

Here, $USER is your system username, and my-application is your application instance. All context enrichment and history retrieval are scoped to this specific partition/instance combination.

Basic Request Structure

Reservoir maintains full compatibility with the OpenAI Chat Completions API. You can use the same request structure, headers, and parameters you would use with OpenAI directly.

Required Headers

Content-Type: application/json

Authorization: Bearer YOUR_API_KEY

Request Body

The request body follows the same format as OpenAI's Chat Completions API:

{

"model": "gpt-4",

"messages": [

{

"role": "user",

"content": "Your message here"

}

]

}

What Happens Behind the Scenes

When you make a request to Reservoir:

- Message Storage: Your message is stored with the specified partition/instance

- Context Enrichment: Reservoir finds relevant past conversations and recent history

- Token Management: The enriched context is checked against token limits

- Request Forwarding: The enriched request is forwarded to the appropriate LLM provider

- Response Storage: The LLM's response is stored for future context

Response Format

Responses maintain the same format as the underlying LLM provider (OpenAI, Ollama, etc.), so your existing code will work without modification.

Next Steps

- Chat Completions Endpoint - Detailed endpoint documentation

- Search & Retrieval - Finding past conversations

- Data Management - Import/export and management

- Command Line Interface - CLI usage and commands

Chat Completions Endpoint

The Chat Completions endpoint is Reservoir's core API, providing full OpenAI API compatibility with intelligent context enrichment. This endpoint automatically enhances your conversations with relevant historical context while maintaining the same request/response format as OpenAI's Chat Completions API.

Endpoint URL

POST /v1/partition/{partition}/instance/{instance}/chat/completions

URL Parameters

| Parameter | Description | Example |
|---|---|---|
| partition | Top-level organization boundary | alice, project_name, $USER |
| instance | Specific context within partition | coding, research, session_123 |

Example URLs

# User-specific coding assistant

POST /v1/partition/alice/instance/coding/chat/completions

# Project-specific documentation bot

POST /v1/partition/docs_project/instance/support/chat/completions

# Personal research assistant

POST /v1/partition/$USER/instance/research/chat/completions

# Default partition/instance (if not specified)

POST /v1/chat/completions # Uses partition=default, instance=default
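The following sketch illustrates how the path determines the scope. It maps a request path to its (partition, instance) pair and includes the documented default. It is a hypothetical helper for illustration, not Reservoir's router, and it assumes partition and instance are each a single path segment.

```python
import re

# Documented pattern: /v1/partition/{partition}/instance/{instance}/chat/completions
_PATH = re.compile(
    r"^/v1/partition/(?P<partition>[^/]+)/instance/(?P<instance>[^/]+)/chat/completions$"
)


def scope_for_path(path):
    """Return (partition, instance) for a chat completions path (hypothetical)."""
    if path == "/v1/chat/completions":
        # Documented default when no partition/instance is given
        return ("default", "default")
    match = _PATH.match(path)
    if match is None:
        raise ValueError(f"not a Reservoir chat completions path: {path}")
    return (match["partition"], match["instance"])


if __name__ == "__main__":
    print(scope_for_path("/v1/partition/alice/instance/coding/chat/completions"))
```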

Request Format

Headers

Content-Type: application/json

Authorization: Bearer YOUR_API_KEY

Request Body

Reservoir accepts the standard OpenAI Chat Completions request format:

{

"model": "gpt-4",

"messages": [

{

"role": "user",

"content": "How do I implement error handling in async functions?"

}

]

}

Supported Models

OpenAI Models:

- gpt-4.1

- gpt-4-turbo

- gpt-4o

- gpt-4o-mini

- gpt-3.5-turbo

- gpt-4o-search-preview

Local Models (via Ollama):

- llama3.1:8b

- llama3.1:70b

- mistral:7b

- codellama:latest

- Any Ollama-supported model

Message Roles

| Role | Description | Usage |
|---|---|---|
| user | User input messages | Questions, requests, instructions |
| assistant | LLM responses | Previous LLM responses in conversation |
| system | System instructions | Behavior modification, context setting |

Context Enrichment Process

When you send a request, Reservoir automatically enhances it with relevant context:

1. Message Analysis

// Your original request

{

"model": "gpt-4",

"messages": [

{

"role": "user",

"content": "How do I handle database timeouts?"

}

]

}

2. Context Discovery

Reservoir finds relevant context through:

- Semantic Search: Messages similar to "database timeouts"

- Recent History: Last 15 messages from same partition/instance

- Synapse Connections: Related discussions via SYNAPSE relationships

3. Context Injection

// Enriched request sent to the Language Model

{

"model": "gpt-4",

"messages": [

{

"role": "system",

"content": "The following is the result of a semantic search of the most related messages by cosine similarity to previous conversations"

},

{

"role": "user",

"content": "What's the best way to configure database connection pools?"

},

{

"role": "assistant",

"content": "For database connection pools, consider these settings..."

},

{

"role": "system",

"content": "The following are the most recent messages in the conversation in chronological order"

},

{

"role": "user",

"content": "I'm working on optimizing database queries"

},

{

"role": "assistant",

"content": "Here are some query optimization techniques..."

},

{

"role": "user",

"content": "How do I handle database timeouts?" // Your original message

}

]

}
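The following is a minimal sketch of the injection step. It assumes the semantic matches and recent history have already been retrieved. It reproduces the message ordering and the two system header strings from the example above. The function name is hypothetical, and omitting a header when its section is empty is an assumption.

```python
SEMANTIC_HEADER = ("The following is the result of a semantic search of the most related "
                   "messages by cosine similarity to previous conversations")
RECENT_HEADER = ("The following are the most recent messages in the conversation "
                 "in chronological order")


def enrich_messages(semantic, recent, new_messages):
    """Assemble an enriched message list in the order shown above (hypothetical).

    semantic: {"role", "content"} dicts returned by similarity search
    recent: recent messages from the same partition/instance, oldest first
    new_messages: the messages from the incoming request
    """
    enriched = []
    if semantic:
        enriched.append({"role": "system", "content": SEMANTIC_HEADER})
        enriched.extend(semantic)
    if recent:
        enriched.append({"role": "system", "content": RECENT_HEADER})
        enriched.extend(recent)
    # The caller's own messages always come last
    enriched.extend(new_messages)
    return enriched
```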

Response Format

Reservoir returns responses in the standard OpenAI Chat Completions format:

{

"id": "chatcmpl-abc123",

"object": "chat.completion",

"created": 1677858242,

"model": "gpt-4",

"usage": {

"prompt_tokens": 13,

"completion_tokens": 7,

"total_tokens": 20

},

"choices": [

{

"message": {

"role": "assistant",

"content": "To handle database timeouts, you should implement retry logic with exponential backoff..."

},

"finish_reason": "stop",

"index": 0

}

]

}
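When you call the endpoint without the openai package, you have to extract these fields yourself. The following is a minimal sketch. It assumes the response shapes shown in this section: a choices list with a message and an optional usage object, or an error object on failure. summarize_completion is a hypothetical name.

```python
import json


def summarize_completion(raw):
    """Extract assistant text, finish reason and total tokens from a response body."""
    data = json.loads(raw) if isinstance(raw, (str, bytes)) else raw
    if "error" in data:
        # Error bodies use {"error": {"message": ...}} as in the error examples in this section
        raise RuntimeError(data["error"].get("message", "unknown error"))
    choice = data["choices"][0]
    usage = data.get("usage", {})
    return {
        "content": choice["message"]["content"],
        "finish_reason": choice.get("finish_reason"),
        "total_tokens": usage.get("total_tokens"),
    }
```

The token-limit response described under Error Handling arrives as a normal assistant message with finish_reason set to "length". Check finish_reason instead of relying on an exception.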

Configuration and Model Selection

Environment Variables

Configure different LLM providers:

# OpenAI (default)

export OPENAI_API_KEY="your-openai-api-key"

export RSV_OPENAI_BASE_URL="https://api.openai.com/v1/chat/completions"

# Ollama (local)

export RSV_OLLAMA_BASE_URL="http://localhost:11434/v1/chat/completions"

# Mistral

export MISTRAL_API_KEY="your-mistral-api-key"

export RSV_MISTRAL_BASE_URL="https://api.mistral.ai/v1/chat/completions"

# Gemini

export GEMINI_API_KEY="your-gemini-api-key"

Model Detection

Reservoir automatically routes requests based on model name:

- OpenAI models: gpt-* → OpenAI API

- Local models: llama*, mistral*, etc. → Ollama API

- Mistral models: mistral-* → Mistral API

Error Handling

Token Limit Errors

If your message exceeds model token limits:

{

"choices": [

{

"message": {

"role": "assistant",

"content": "Your last message is too long. It contains approximately 5000 tokens, which exceeds the maximum limit of 4096. Please shorten your message."

},

"finish_reason": "length",

"index": 0

}

]

}
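A client-side pre-check can catch oversized messages before they reach Reservoir. This is a hypothetical sketch. Reservoir's own counting method isn't described here, so the sketch uses the rough rule of thumb of about four characters per English token. It reproduces the response shape above only for illustration.

```python
def estimate_tokens(text):
    """Rough estimate: about four characters per token (assumption, not a tokenizer)."""
    return (len(text) + 3) // 4


def check_last_message(messages, max_tokens):
    """Return a length-limit response like the one above if the last message is too long."""
    tokens = estimate_tokens(messages[-1]["content"])
    if tokens <= max_tokens:
        return None
    return {
        "choices": [{
            "message": {
                "role": "assistant",
                "content": (f"Your last message is too long. It contains approximately "
                            f"{tokens} tokens, which exceeds the maximum limit of "
                            f"{max_tokens}. Please shorten your message."),
            },
            "finish_reason": "length",
            "index": 0,
        }]
    }
```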

API Connection Errors

{

"error": {

"message": "Failed to connect to OpenAI API: Connection timeout. Check your API key and network connection. Using model 'gpt-4' at 'https://api.openai.com/v1/chat/completions'"

}

}

Invalid Model Errors

{

"error": {

"message": "Invalid OpenAI model name: 'gpt-5'. Valid models are: ['gpt-4.1', 'gpt-4-turbo', 'gpt-4o', 'gpt-4o-mini', 'gpt-3.5-turbo', 'gpt-4o-search-preview']"

}

}

Usage Examples

Basic Request

curl -X POST "http://localhost:3017/v1/partition/alice/instance/coding/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-d '{

"model": "gpt-4",

"messages": [

{

"role": "user",

"content": "Explain async/await in Python"

}

]

}'

With System Message

curl -X POST "http://localhost:3017/v1/partition/docs/instance/writing/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-d '{

"model": "gpt-4",

"messages": [

{

"role": "system",

"content": "You are a technical documentation expert. Provide clear, concise explanations."

},

{

"role": "user",

"content": "How should I document API endpoints?"

}

]

}'

Local Model (Ollama)

curl -X POST "http://localhost:3017/v1/partition/alice/instance/local/chat/completions" \

-H "Content-Type: application/json" \

-d '{

"model": "llama3.1:8b",

"messages": [

{

"role": "user",

"content": "What are the benefits of using local LLMs?"

}

]

}'

Integration Examples

Python with OpenAI Library

import os

from openai import OpenAI

# Configure the client to use Reservoir instead of OpenAI directly
client = OpenAI(
    base_url="http://localhost:3017/v1/partition/alice/instance/coding",
    api_key=os.environ.get("OPENAI_API_KEY"),
)

response = client.chat.completions.create(
    model="gpt-4",
    messages=[
        {"role": "user", "content": "How do I optimize this database query?"}
    ]
)

print(response.choices[0].message.content)

JavaScript/Node.js

const OpenAI = require('openai');

const openai = new OpenAI({

apiKey: process.env.OPENAI_API_KEY,

baseURL: 'http://localhost:3017/v1/partition/myapp/instance/support'

});

async function chat(message) {

const completion = await openai.chat.completions.create({

messages: [{ role: 'user', content: message }],

model: 'gpt-4',

});

return completion.choices[0].message.content;

}

Streaming Responses

Reservoir supports streaming responses when the underlying model supports it:

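import os

from openai import OpenAI

client = OpenAI(
    base_url="http://localhost:3017/v1/partition/alice/instance/chat",
    api_key=os.environ.get("OPENAI_API_KEY"),
)

stream = client.chat.completions.create(
    model="gpt-4",
    messages=[{"role": "user", "content": "Explain machine learning"}],
    stream=True
)

for chunk in stream:
    # Some chunks (e.g. the final one) carry no text, so skip empty deltas
    if chunk.choices and chunk.choices[0].delta.content:
        print(chunk.choices[0].delta.content, end="")
print()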

Advanced Features

Web Search Integration

Some models support web search capabilities:

{

"model": "gpt-4o-search-preview",

"messages": [

{

"role": "user",

"content": "What are the latest developments in AI?"

}

],

"web_search_options": {

"enabled": true

}

}

Message Storage

All messages (user and assistant) are automatically stored with:

- Embeddings: For semantic search and context enrichment

- Timestamps: For chronological ordering

- Partition/Instance: For data organization

- Trace IDs: For linking request/response pairs

Context Control

Control context enrichment via configuration:

# Adjust context size

reservoir config --set semantic_context_size=20

reservoir config --set recent_context_size=15

# View current settings

reservoir config --get semantic_context_size

Performance Considerations

Token Management

- Reservoir automatically manages token limits for each model

- Context is intelligently truncated when necessary

- Priority given to most relevant and recent content
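The following is a minimal sketch of one way such truncation could work. It assumes that every system message and the final (current) message are always kept, and that the oldest remaining messages are dropped first. The four-characters-per-token estimate and the function names are assumptions, not Reservoir's implementation. The sketch also ignores relevance ranking.

```python
def estimate_tokens(text):
    """Rough estimate: about four characters per token (assumption, not a tokenizer)."""
    return (len(text) + 3) // 4


def truncate_to_budget(messages, budget):
    """Drop the oldest non-system messages (never the last one) until under budget."""
    kept = list(messages)

    def total(msgs):
        return sum(estimate_tokens(m["content"]) for m in msgs)

    i = 0
    # Stop before the final message so the current request is always preserved
    while total(kept) > budget and i < len(kept) - 1:
        if kept[i]["role"] == "system":
            i += 1  # system messages are always preserved
        else:
            del kept[i]  # oldest droppable message goes first
    return kept
```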

Caching

- Embeddings are cached to avoid recomputation

- Vector indices are optimized for fast similarity search

- Connection pooling for database efficiency

Latency

- Typical latency: 200-500ms for context enrichment

- Parallel processing of semantic search and recent history

- Optimized Neo4j queries for fast retrieval

The Chat Completions endpoint provides the full power of Reservoir's context enrichment while maintaining complete compatibility with existing OpenAI-based applications, making it easy to add conversational memory to any LLM application.

Search & Retrieval

Reservoir provides powerful search capabilities for finding relevant conversations and messages across your entire conversation history. The search system supports both keyword-based and semantic similarity searches, enabling you to discover related discussions even when they use different terminology.

Search Methods

Keyword Search

Traditional text-based search that finds exact matches or partial matches within message content.

CLI Usage:

# Basic keyword search

reservoir search "python programming"

# Search in specific partition

reservoir search --partition alice "machine learning"

Characteristics:

- Fast and precise for exact term matches

- Case-insensitive matching

- Supports partial word matching

- Best for finding specific technical terms or names
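The following is a minimal sketch of the matching rules listed above. It assumes the whole query is matched as one case-insensitive substring, because how Reservoir treats multi-word queries isn't specified here. keyword_search and the message dict fields are hypothetical.

```python
def keyword_search(messages, query, partition=None):
    """Case-insensitive substring match over message content, optionally per partition."""
    needle = query.casefold()
    return [
        m for m in messages
        if needle in m["content"].casefold()
        and (partition is None or m["partition"] == partition)
    ]
```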

Semantic Search

Vector-based similarity search that finds conceptually related messages even when they use different words.

CLI Usage:

# Semantic search

reservoir search --semantic "machine learning concepts"

# Use RAG strategy (same as context enrichment)

reservoir search --link --semantic "database design"

Characteristics:

- Finds conceptually similar content

- Works across different terminology

- Uses BGE-Large-EN-v1.5 embeddings

- Powers Reservoir's context enrichment system

Search Options

Partitioning

Scope your search to specific organizational boundaries:

# Search in specific partition

reservoir search --partition alice "neural networks"

# Search in specific instance within partition

reservoir search --partition alice --instance coding "API design"

Deduplication

Remove duplicate or highly similar results:

# Remove duplicate results

reservoir search --deduplicate --semantic "error handling"

RAG Strategy

Use the same search strategy that powers context enrichment:

# Use advanced search with synapse expansion

reservoir search --link --semantic "software architecture"

The --link option:

- Searches for semantically similar messages

- Expands results using synapse relationships

- Follows conversation threads

- Deduplicates automatically

- Limits results to most relevant matches

Search Implementation

Vector Similarity

Reservoir uses cosine similarity to find related messages:

- Query Embedding: Your search term is converted to a vector using BGE-Large-EN-v1.5

- Index Search: Neo4j's vector index finds similar message embeddings

- Scoring: Results are ranked by similarity score (0.0 to 1.0)

- Filtering: Results are filtered by partition/instance boundaries
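These four steps can be approximated in memory with a brute-force scan that stands in for Neo4j's vector index. This is a sketch under several assumptions. The vector_search helper and the three-dimensional toy vectors are illustrative; real BGE-Large-EN-v1.5 vectors have 1024 dimensions. The partition/instance filter is applied before scoring for simplicity. Raw cosine similarity, which ranges from -1 to 1, is rescaled to 0.0 to 1.0 with (1 + cos) / 2, and Neo4j likewise reports cosine scores normalized to that range.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Raw cosine similarity in [-1, 1]; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def vector_search(query, messages, partition, instance=None, top_k=3):
    """Brute-force stand-in for a vector-index query.

    Keeps messages in the requested partition (and instance, if given),
    rescales cosine similarity to [0, 1], and returns up to top_k
    (score, message) pairs, highest score first.
    """
    scored = []
    for m in messages:
        if m["partition"] != partition:
            continue
        if instance is not None and m["instance"] != instance:
            continue
        score = (1.0 + cosine(query, m["embedding"])) / 2.0
        scored.append((score, m))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


messages = [
    {"partition": "alice", "instance": "coding", "role": "user",
     "content": "async error handling", "embedding": [0.9, 0.1, 0.0]},
    {"partition": "alice", "instance": "coding", "role": "user",
     "content": "database indexes", "embedding": [0.0, 0.2, 0.9]},
    {"partition": "alice", "instance": "research", "role": "assistant",
     "content": "exceptions in coroutines", "embedding": [0.8, 0.3, 0.1]},
    {"partition": "bob", "instance": "coding", "role": "user",
     "content": "try/except basics", "embedding": [1.0, 0.0, 0.0]},
]
query = [1.0, 0.0, 0.0]  # pretend embedding of the search term

for score, m in vector_search(query, messages, partition="alice"):
    print(f"{score:.3f}  {m['instance']}/{m['role']}: {m['content']}")
```

Note that Bob's message is never scored, even though it matches the query best, because it lies outside the requested partition.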

Synapse Expansion

When using --link, the search expands beyond direct similarity:

- Initial Search: Find semantically similar messages

- Synapse Following: Explore connected messages via SYNAPSE relationships

- Thread Discovery: Follow conversation threads and related discussions

- Relevance Scoring: Combine similarity scores with relationship strength

- Result Limiting: Return top matches within context limits
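One way to picture synapse expansion is as a bounded breadth-first walk outward from the initial hits. In this sketch, every name is hypothetical. The synapses map goes from a message ID to weighted neighbors, and it could hold both SYNAPSE edges and conversation-thread links. A neighbor's score is its parent's score multiplied by the edge strength. That combination rule is an assumption, not Reservoir's documented formula.

```python
from collections import deque


def expand_with_synapses(seeds, synapses, max_hops=1, limit=5):
    """Expand initial search hits along weighted edges.

    seeds:    {message_id: similarity score from the initial search}
    synapses: {message_id: [(neighbor_id, edge_strength), ...]}
    Returns up to `limit` (message_id, score) pairs, best first.
    """
    best = dict(seeds)  # best score found so far for each message
    queue = deque((mid, score, 0) for mid, score in seeds.items())
    while queue:
        mid, score, hops = queue.popleft()
        if hops >= max_hops:
            continue  # never walk more than max_hops edges away from a seed
        for neighbor, strength in synapses.get(mid, []):
            candidate = score * strength  # assumed rule: decay by edge strength
            if candidate > best.get(neighbor, 0.0):
                best[neighbor] = candidate
                queue.append((neighbor, candidate, hops + 1))
    # Keys of `best` are unique, so the ranked list is already deduplicated
    ranked = sorted(best.items(), key=lambda item: item[1], reverse=True)
    return ranked[:limit]


seeds = {"m1": 0.9, "m2": 0.7}
synapses = {
    "m1": [("m3", 0.9), ("m2", 0.95)],
    "m2": [("m5", 0.5)],
    "m3": [("m4", 0.9)],
}
print(expand_with_synapses(seeds, synapses))
print(expand_with_synapses(seeds, synapses, max_hops=2))
```

Raising max_hops reaches more distant messages (m4 appears only at two hops), which matches the observation that the --link strategy is the most comprehensive but also the slowest.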

Example Queries

Finding Programming Discussions

# Find all Python-related conversations

reservoir search --semantic "python programming"

# Find specific error discussions

reservoir search "TypeError"

# Find design pattern conversations

reservoir search --link --semantic "software design patterns"

Research and Analysis

# Find machine learning discussions

reservoir search --semantic "neural networks deep learning"

# Find database-related conversations

reservoir search --partition research --semantic "database optimization"

# Find recent discussions on a topic

reservoir view 50 | grep -i "kubernetes"

Cross-Conversation Discovery

# Find related discussions across all conversations

reservoir search --link --semantic "microservices architecture"

# Discover connections between topics

reservoir search --deduplicate --semantic "testing strategies"

Search Results Format

CLI Output

Search results include:

- Timestamp: When the message was created

- Partition/Instance: Organizational context

- Role: User or assistant message

- Content: The actual message text

- Score: Similarity score (for semantic search)

JSON Format

When exported, search results follow the MessageNode structure:

{
  "trace_id": "abc123-def456",
  "partition": "alice",
  "instance": "coding",
  "role": "user",
  "content": "How do I implement error handling in async functions?",
  "timestamp": "2024-01-15T10:30:00Z",
  "embedding": [0.1, -0.2, 0.3, ...],
  "url": null
}
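Scripts that consume exported results can mirror these fields in a Python dataclass. The field names come from the JSON above. The MessageNode class and its from_dict and parsed_timestamp methods are hypothetical helpers for illustration, not part of Reservoir.

```python
import json
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class MessageNode:
    """One exported message; field names follow the JSON example."""

    trace_id: str
    partition: str
    instance: str
    role: str
    content: str
    timestamp: str
    embedding: list[float] = field(default_factory=list)
    url: Optional[str] = None

    @classmethod
    def from_dict(cls, data: dict) -> "MessageNode":
        # Required keys raise KeyError if absent; embedding and url are optional here.
        return cls(
            trace_id=data["trace_id"],
            partition=data["partition"],
            instance=data["instance"],
            role=data["role"],
            content=data["content"],
            timestamp=data["timestamp"],
            embedding=data.get("embedding") or [],
            url=data.get("url"),
        )

    def parsed_timestamp(self) -> datetime:
        # Before Python 3.11, fromisoformat() rejects a trailing "Z", so map it to +00:00.
        return datetime.fromisoformat(self.timestamp.replace("Z", "+00:00"))


raw = """{"trace_id": "abc123-def456", "partition": "alice", "instance": "coding",
          "role": "user", "content": "How do I implement error handling in async functions?",
          "timestamp": "2024-01-15T10:30:00Z", "embedding": [0.1, -0.2, 0.3], "url": null}"""
node = MessageNode.from_dict(json.loads(raw))
print(node.partition, node.role, node.parsed_timestamp().isoformat())
```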

Integration with Context Enrichment

The search system directly powers Reservoir's context enrichment:

- Automatic Search: Every user message triggers a semantic search

- Context Building: Search results become conversation context

- Relevance Filtering: Only high-quality matches (>0.85 similarity) are used

- Token Management: Results are truncated to fit model token limits
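The filtering and truncation steps can be sketched as follows. The 0.85 cutoff comes from the list above. The build_context helper, the word-count token estimate, and the stop-at-first-overflow policy are assumptions for illustration. A real deployment would count tokens with the target model's tokenizer.

```python
def approx_tokens(text: str) -> int:
    """Crude token estimate: one token per whitespace-separated word (assumption)."""
    return len(text.split())


def build_context(results, max_tokens, min_similarity=0.85):
    """Pick context messages from (similarity, message) search results.

    Only results scoring above min_similarity are considered, most similar first;
    selection stops at the first result that would overflow the token budget.
    """
    relevant = [r for r in results if r[0] > min_similarity]
    relevant.sort(key=lambda r: r[0], reverse=True)
    selected, used = [], 0
    for score, message in relevant:
        cost = approx_tokens(message["content"])
        if used + cost > max_tokens:
            break  # truncate: drop this result and every lower-ranked one
        selected.append(message)
        used += cost
    return selected


results = [
    (0.91, {"content": "one two three"}),
    (0.80, {"content": "low score"}),
    (0.95, {"content": "a b"}),
    (0.88, {"content": "four five six seven"}),
]
for m in build_context(results, max_tokens=6):
    print(m["content"])
```

Stopping at the first overflow keeps the injected context strictly in relevance order. An alternative would be to skip the oversized result and keep packing smaller ones, which uses the budget more fully but can admit less relevant material.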

Performance Considerations

Vector Index

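Reservoir maintains vector indices for fast search. The example below defines a cosine-similarity index over 1536-dimensional embeddings. An index's vector.dimensions setting must match the output size of the embedding model whose vectors it stores for those vectors to be searchable. For example, BGE-Large-EN-v1.5 produces 1024-dimensional vectors.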

CREATE VECTOR INDEX embedding1536
FOR (n:Embedding1536) ON (n.embedding)
OPTIONS {
  indexConfig: {
    `vector.dimensions`: 1536,
    `vector.similarity_function`: 'cosine'
  }
}

Search Strategies

- Keyword Search: Fastest for exact matches

- Basic Semantic: Good balance of speed and relevance

- RAG Strategy (--link): Most comprehensive but slower

- Deduplication: Adds processing time but improves result quality

Optimization Tips

- Use Specific Partitions: Reduces search space

- Keyword for Exact Terms: Faster than semantic for specific names

- Semantic for Concepts: Better for finding related ideas

- Limit Result Count: Implicit in CLI, configurable in API

Advanced Usage

Combining with Other Commands

# Search and then view context

reservoir search --semantic "error handling" | head -5

reservoir view 10

# Search and ingest related information

echo "Related to error handling discussion" | reservoir ingest

# Export search results for analysis

reservoir search --semantic "API design" > api_discussions.txt

Scripting and Automation

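#!/bin/bash

# Find and analyze discussions about the topic given as the first argument

if [ -z "$1" ]; then
  echo "Usage: $0 \"topic\"" >&2
  exit 1
fi

TOPIC="$1"

# Build a filename-safe version of the topic (spaces, slashes, etc. become "_")

SAFE_TOPIC=$(printf '%s' "$TOPIC" | tr -c 'A-Za-z0-9_-' '_')
RESULTS="search_results_$SAFE_TOPIC.txt"

echo "Searching for discussions about: $TOPIC"

# Semantic search with RAG strategy

reservoir search --link --semantic "$TOPIC" > "$RESULTS"

# Count output lines (only approximates the number of messages, since one result can span several lines)

TOTAL=$(wc -l < "$RESULTS" | tr -d ' ')
echo "Found $TOTAL lines of related results"

# Show recent activity (-F matches the topic literally instead of as a regular expression)

echo "Recent activity:"
reservoir view 20 | grep -iF "$TOPIC" | head -n 3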

The search system is designed to make your conversation history searchable and discoverable, turning your accumulated AI interactions into a valuable knowledge base that grows more useful over time.

Data Management

Reservoir provides comprehensive data management capabilities for backing up, migrating, and organizing your conversation data. The system supports full data export/import, individual message management, and flexible partitioning strategies.

Export and Import

Export All Data

Export your entire conversation history as JSON for backup or migration:

# Export all messages to stdout

reservoir export

# Save to file with timestamp

reservoir export > backup_$(date +%Y%m%d_%H%M%S).json

# Export and compress for storage

reservoir export | gzip > reservoir_backup.json.gz

Export Format: Each message is exported as a complete MessageNode with all metadata:

[
  {
    "trace_id": "550e8400-e29b-41d4-a716-446655440000",
    "partition": "default",
    "instance": "default",
    "role": "user",
    "content": "How do I implement error handling in async functions?",
    "timestamp": "2024-01-15T10:30:00.000Z",
    "embedding": [0.123, -0.456, 0.789, ...],
    "url": null
  },
  {
    "trace_id": "550e8400-e29b-41d4-a716-446655440001",
    "partition": "default",
    "instance": "default",
    "role": "assistant",
    "content": "Here are several approaches to error handling in async functions...",
    "timestamp": "2024-01-15T10:30:15.000Z",
    "embedding": [0.234, -0.567, 0.890, ...],
    "url": null
  }
]
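Because the export is a plain JSON array, it is easy to inspect offline. The hypothetical Python helper below loads an export file, either plain or gzip-compressed as produced by the commands above, and counts messages per partition/instance pair. It relies only on the field names shown in the example.

```python
import gzip
import json
import sys
from collections import Counter


def load_export(path: str) -> list[dict]:
    """Load a JSON array written by `reservoir export`; .gz files are decompressed."""
    opener = gzip.open if path.endswith(".gz") else open
    with opener(path, "rt", encoding="utf-8") as f:
        return json.load(f)


def summarize(messages: list[dict]) -> Counter:
    """Count messages per (partition, instance) pair."""
    return Counter((m["partition"], m["instance"]) for m in messages)


if __name__ == "__main__" and len(sys.argv) > 1:
    counts = summarize(load_export(sys.argv[1]))
    for (partition, instance), count in sorted(counts.items()):
        print(f"{partition}/{instance}: {count} messages")
```

Save it under any name and pass the backup file as the only argument, for example reservoir_backup.json.gz.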

Import Data

Import message data from JSON files:

# Import from a backup file

reservoir import backup_20240115.json

# Import from another Reservoir instance

reservoir import exported_conversations.json

# Import compressed backup

gunzip -c reservoir_backup.json.gz | reservoir import /dev/stdin

Import Behavior:

- Validates JSON format and MessageNode structure

- Preserves all metadata including timestamps and embeddings

- Maintains partition/instance organization

- Skips duplicate messages (based on trace_id)
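These import rules can be mirrored in a pre-flight check that runs before a file is passed to reservoir import. This is a sketch, not Reservoir's code. The plan_import helper, the set of required fields, and the way existing trace IDs are obtained are all assumptions.

```python
import json

# Hypothetical minimum field set; embedding and url are treated as optional.
REQUIRED_FIELDS = {"trace_id", "partition", "instance", "role", "content", "timestamp"}


def plan_import(raw_json: str, existing_trace_ids: set[str]):
    """Validate exported messages and split them into (to_import, skipped).

    Raises ValueError (json.JSONDecodeError is a subclass) on malformed input.
    """
    records = json.loads(raw_json)
    if not isinstance(records, list):
        raise ValueError("expected a JSON array of messages")
    seen = set(existing_trace_ids)
    to_import, skipped = [], []
    for i, rec in enumerate(records):
        if not isinstance(rec, dict):
            raise ValueError(f"record {i} is not a JSON object")
        missing = REQUIRED_FIELDS.difference(rec)
        if missing:
            raise ValueError(f"record {i} is missing {sorted(missing)}")
        if rec["trace_id"] in seen:
            skipped.append(rec)  # duplicate: already stored, or repeated in this file
        else:
            seen.add(rec["trace_id"])
            to_import.append(rec)  # kept unchanged, so timestamps, embeddings, partition survive
    return to_import, skipped


def message(trace_id, role, content):
    # Hypothetical helper that builds a minimal exported record
    return {"trace_id": trace_id, "partition": "default", "instance": "default",
            "role": role, "content": content, "timestamp": "2024-01-15T10:30:00.000Z"}


export = json.dumps([
    message("t-1", "user", "How do I handle async errors?"),
    message("t-2", "assistant", "Wrap awaits in try/except."),
    message("t-2", "assistant", "Wrap awaits in try/except."),
])
to_import, skipped = plan_import(export, existing_trace_ids={"t-1"})
print(f"{len(to_import)} to import, {len(skipped)} skipped")
```

Records are passed through untouched, which is what preserves timestamps, embeddings, and the partition/instance organization.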